I recently came across a project where I needed to interact with some RFID tags. I wanted to retrieve the unique ID of each badge. I had absolutely no information on the badge except the string “HID iClass” written on it.

I started doing some research and found out that there are 2 main frequencies used in RFID: 125 kHz and 13.56 MHz. The iClass seems to be mainly based on the 13.56 MHz standard, so I decided to go for a reader on this frequency.

Then I found out that there are several standards on this frequency. The most used are, in order, ISO 14443A, ISO 14443B, and ISO 15693. Unfortunately, the iClass family includes tag variants for all of these standards. In the end I decided to buy the Adafruit reader, which handles both ISO 14443A and B: https://www.adafruit.com/products/364

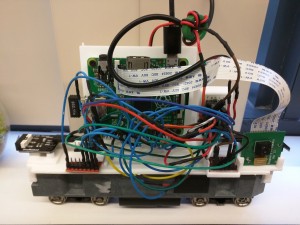

I set it up with a Raspberry Pi 2 and was able to read the tag shipped with the reader, but sadly not the tag I wanted to read… Since I was unable to read my tag, I guessed that it uses the third protocol: ISO 15693.

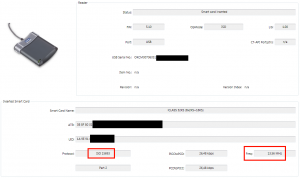

I looked for a reader for ISO 15693, but the choice is very limited (since it is not widely used). In the meantime I found a cheap HID reader on Amazon (https://www.hidglobal.fr/products/readers/omnikey/5321-cli) which should be compatible with HID iClass cards, so I decided to buy it.

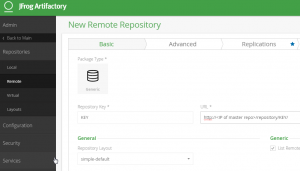

It works pretty well on Windows with their driver and software, and gives me some useful information about my badge. It allowed me to confirm that the badge uses the ISO 15693 standard.

It’s a good start, but I wanted to use it on a Raspberry Pi. I did some research and found out that this type of RFID card reader is called “PCSC”:

PC/SC (short for “Personal Computer/Smart Card”) is a specification for smart-card integration into computing environments. (wikipedia)

Moreover, there is a USB standard for such devices: CCID.

CCID (chip card interface device) protocol is a USB protocol that allows a smartcard to be connected to a computer via a card reader using a standard USB interface (wikipedia)

Most USB-based readers comply with a common USB CCID specification and therefore rely on the same driver (libccid under Linux), which is part of the MUSCLE project: https://pcsclite.alioth.debian.org/
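To make the goal concrete, here is a minimal sketch of how a badge UID is usually requested through PC/SC once a reader is recognised by the daemon. It assumes the third-party pyscard package and a reader that actually answers the PC/SC "GET DATA" pseudo-APDU (`FF CA 00 00 00`); the function names are my own, and whether a given reader returns a UID for an iClass badge is not guaranteed.

```python
# Sketch only: asking a PC/SC reader for the UID of a contactless card.
# FF CA 00 00 00 is the PC/SC "GET DATA" pseudo-APDU that requests the UID.
GET_UID_APDU = [0xFF, 0xCA, 0x00, 0x00, 0x00]


def parse_uid_response(data, sw1, sw2):
    """Return the UID as a hex string, or None if the status word is not 90 00."""
    if (sw1, sw2) != (0x90, 0x00):
        return None
    return "".join(f"{byte:02X}" for byte in data)


def read_uid_from_first_reader():
    """Hypothetical helper: needs the pyscard package and a running pcscd."""
    # Imported lazily so the rest of the sketch works without pyscard installed.
    from smartcard.System import readers

    available = readers()
    if not available:
        raise RuntimeError("pcscd reports no reader")
    connection = available[0].createConnection()
    connection.connect()  # fails if no card is present on the reader
    data, sw1, sw2 = connection.transmit(GET_UID_APDU)
    return parse_uid_response(data, sw1, sw2)
```

Calling `read_uid_from_first_reader()` only makes sense with a badge on a reader that pcscd lists; `parse_uid_response` can be tried on its own with any byte list.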

I came across plenty of software related to RFID reading on Linux during my research, before choosing to try CCID. Here are my raw notes for future reference:

- libccid
  - This package provides the source code for a generic USB CCID (Chip/Smart Card Interface Devices) driver and ICCD (Integrated Circuit(s) Card Devices).
  - https://pcsclite.alioth.debian.org/ccid.html

- PCSC lite project
  - Middleware to access a smart card using SCard API (PC/SC).
  - https://pcsclite.alioth.debian.org/pcsclite.html
  - Relies on libccid

- PCSC-tools
  - https://github.com/LudovicRousseau/pcsc-tools
  - Tools to use with the PCSC project (to read tags, for example)

- librfid
  - Seems dead
  - https://github.com/dpavlin/librfid
  - low-level RFID access library
  - This library intends to provide a reader and (as much as possible) PICC / tag independent API for RFID applications

- pcscd
  - https://linux.die.net/man/8/pcscd
  - PC/SC Smart Card Daemon
  - pcscd is the Linux daemon to access readers compatible with the PC/SC standard.

- libnfc
  - https://github.com/nfc-tools/libnfc
  - forum is dead
  - libnfc is the first libre low level NFC SDK and Programmers API
  - Platform independent Near Field Communication (NFC) library http://nfc-tools.org
  - libnfc seems to depend on libccid, but that seems to depend on the hardware reader used. Note: If you want all libnfc hardware drivers, you will need to have libusb (library and headers) plus on *BSD and GNU/Linux systems, libpcsclite (library and headers). Because some dependencies (e.g. libusb and optional PCSC-Lite) are used

- OpenSC
  - https://github.com/OpenSC/OpenSC/wiki
  - OpenSC provides a set of libraries and utilities to work with smart cards.

I decided to go with the MUSCLE project available here: https://pcsclite.alioth.debian.org/ccid.html

After installing the driver/daemon and the tools to interact with the reader, I ran into trouble: the reader was not detected by pcscd. Luckily there is a section “Check reader’s compliance to CCID specification” on the pcsc page to find out whether a reader is supported by the driver. I followed it and sent the report to the main maintainer of the pcsc driver: Ludovic Rousseau.

He confirmed that the driver had never been tested with this reader and gave me instructions to try it:

Edit the file CCID/readers/supported_readers.txt and add the line:

0x076B:0x532A:5321 CLi USB

Then (re)install the CCID reader and try again to use the reader.

https://ludovicrousseau.blogspot.fr/2014/03/level-1-smart-card-support-on-gnulinux.html
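The line added to supported_readers.txt follows a `vendorID:productID:name` layout. As a small sketch (the helper names are mine, not part of the CCID driver), this is how such an entry can be split and turned into the `vid:pid` form that `lsusb` prints, which is handy to double-check that the IDs match the plugged-in reader:

```python
def parse_supported_reader(line):
    """Split a 'vendorID:productID:name' entry into (vendor_id, product_id, name)."""
    vendor, product, name = line.strip().split(":", 2)
    # int(..., 16) accepts the leading "0x" used in the file.
    return int(vendor, 16), int(product, 16), name


def as_lsusb_id(vendor_id, product_id):
    """Format the IDs the way lsusb displays them, e.g. '076b:532a'."""
    return f"{vendor_id:04x}:{product_id:04x}"


vendor_id, product_id, name = parse_supported_reader("0x076B:0x532A:5321 CLi USB")
print(as_lsusb_id(vendor_id, product_id), name)
```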

I followed the instructions and the reader got detected by the daemon. However, the card was still not detected, so I provided more feedback and logs to Ludovic for debugging. Sadly, the result is that the reader cannot be supported:

The conclusion is that this reader is not CCID compliant. I am not surprised by this result.

You have to use the proprietary driver and no driver is provided for RaspberryPi.

If you are looking for a contactless reader have a look at https://pcsclite.alioth.debian.org/select_readers/?features=contactless

I will try to see if I can interact with the reader through libusb, and also try to find a cheap open source ISO 15693 reader to continue this project.

Update 23JAN2017

I contacted Omnikey to get support for using their reader in my project, and they confirmed there is no driver for it on the Pi.

We don’t have any drivers for 5321 CLi on Raspberry Pi. Please have a look at OMNIKEY 5022 or OMNIKEY 5427 CK instead. They can be accessed through native ccidlib.

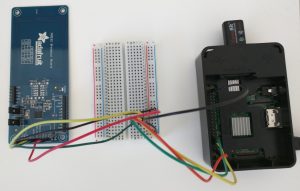

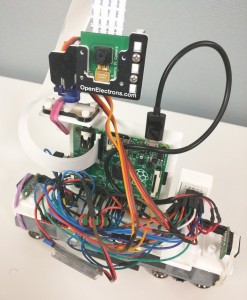

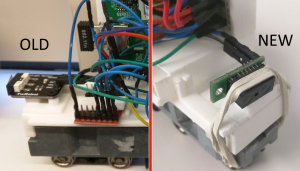

In the meantime I also bought another reader, compatible with the ISO 15693 standard: http://www.solutions-cubed.com/bm019/

I wired it to an Arduino Uno by following their blog article: http://blog.solutions-cubed.com/near-field-communication-nfc-with-the-arduino/

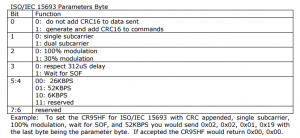

Nevertheless, I was still unable to read the tags. I started doing deeper research and found that ISO 15693 has several settings, and I do not know which ones my iClass tags are using. I tried all the possible combinations that the BM019 handles.
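To give an idea of what these settings look like on the wire, here is a sketch that builds the ISO 15693 Inventory request for each combination of the two request flags that select the tag's response sub-carrier and data rate, and appends the CRC-16 (ISO/IEC 13239, sent low byte first). This is only the standard frame layout; it is not the BM019 or CR95HF configuration API, and the function and constant names are my own.

```python
from itertools import product

# ISO 15693 request flag bits (inventory mode).
FLAG_DUAL_SUBCARRIER = 0x01
FLAG_HIGH_DATA_RATE = 0x02
FLAG_INVENTORY = 0x04
FLAG_ONE_SLOT = 0x20
CMD_INVENTORY = 0x01


def crc16_iso13239(data):
    """CRC-16 used by ISO 15693: reflected poly 0x8408, init 0xFFFF, inverted."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            crc = (crc >> 1) ^ 0x8408 if crc & 1 else crc >> 1
    return crc ^ 0xFFFF


def inventory_request(dual_subcarrier, high_data_rate):
    """Build an unmasked, single-slot Inventory request including its CRC."""
    flags = FLAG_INVENTORY | FLAG_ONE_SLOT
    if dual_subcarrier:
        flags |= FLAG_DUAL_SUBCARRIER
    if high_data_rate:
        flags |= FLAG_HIGH_DATA_RATE
    body = bytes([flags, CMD_INVENTORY, 0x00])  # 0x00 = mask length
    return body + crc16_iso13239(body).to_bytes(2, "little")


# One frame per flag combination.
for dual, high in product((False, True), repeat=2):
    print(f"dual_subcarrier={dual} high_data_rate={high}:",
          inventory_request(dual, high).hex())
```

The single sub-carrier, high data rate case gives the frame `260100f60a`. Many reader chips can append the CRC themselves, in which case only the first three bytes would be sent to the chip.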

Even after all these tests I was still unable to read them. I dug deeper and found out that the BM019 module is built around the ST CR95HF chip. It seems that I’m not the only one trying to read iClass with this IC: their support forum has several posts explaining that it is not possible, since iClass does not properly follow the ISO 15693 standard:

issue comes from Picopass which is not ISO 15693 compliant,

timing are not respected.

We have already implemented a tricky command which allow us to support Picopass, a new version of CR95HF development software will be soon available including a dedicated window for PICOPASS.

After 3 readers and countless hours of attempts, I’m still unable to read the iClass badges, since they do not seem to implement any real standard.